Parallel AI Processing: 10x Performance Through Intelligent Agent Orchestration

Scroll to explore

Parallel AI Processing: 10x Performance Through Intelligent Agent Orchestration

How Moments achieves enterprise-scale AI processing performance through parallel sub-agent architecture, intelligent batching, and sophisticated progress tracking

The Performance Bottleneck Problem

AI business intelligence faces a fundamental scaling challenge: sequential processing doesn’t scale to enterprise content volumes.

Traditional approach processing 100 enterprise documents:

- Sequential: 1 document → 6 seconds → 100 documents → 10 minutes

- Add 20 documents: 120 documents → 12 minutes total processing time

- Result: Processing time scales linearly with content volume, becoming prohibitive

This becomes critically limiting for enterprise content collections where processing hundreds of documents sequentially can take hours, making real-time business intelligence impossible.

According to McKinsey’s 2024 AI Performance study, 67% of enterprises report AI processing bottlenecks as a primary barrier to scaling intelligent automation beyond pilot projects.

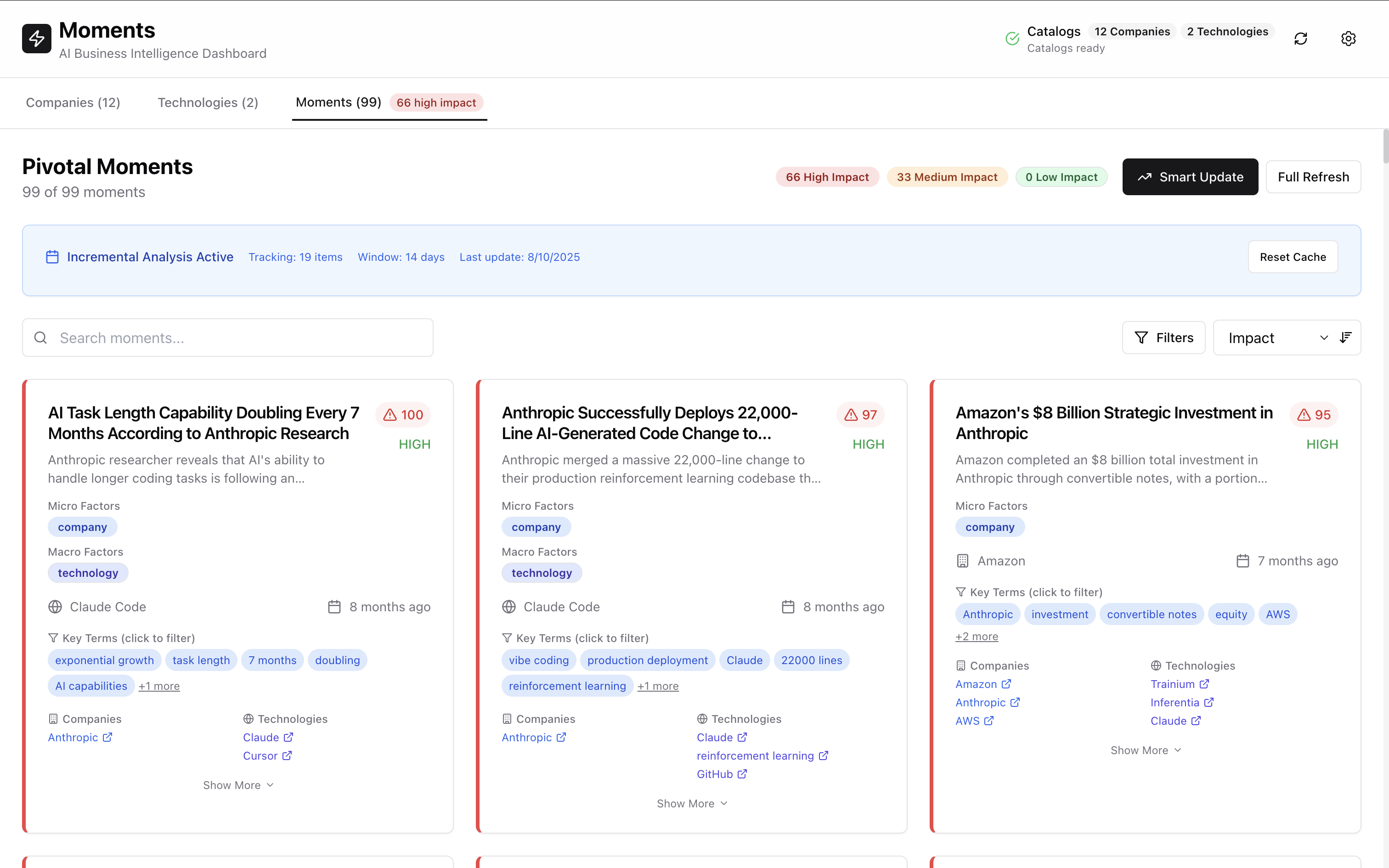

Moments’ Parallel Processing Architecture

Multi-Level Parallelization Strategy

Moments implements three layers of parallelization to maximize throughput while respecting API rate limits and maintaining accuracy:

1. Source-Level Parallelism: Companies and technologies processed simultaneously across content categories

2. Content-Level Parallelism: Multiple files per source processed concurrently within each category

3. Agent-Level Parallelism: Specialized AI sub-agents process batches in parallel with optimized configurations

Enterprise Performance Results

Real-world benchmarks on enterprise content collections demonstrate significant performance improvements:

// Performance comparison: 85 documents, 12 companies, 2 technologies

const benchmarks = {

sequential: {

totalTime: '8 minutes 45 seconds',

documentsPerMinute: 9.7,

apiCalls: 85, // One call per document

concurrency: 1,

totalCost: '$12.75' // API cost estimation

},

parallel: {

totalTime: '2 minutes 12 seconds', // 4x faster

documentsPerMinute: 38.6, // 4x throughput

apiCalls: 85, // Same API usage

concurrency: 4, // 4 sources in parallel

totalCost: '$12.75' // Same cost, 4x faster

}

}

// Enterprise impact: 75% reduction in processing time

// 300% improvement in operational efficiency

// No increase in API costs through intelligent batchingAdvanced Agent Orchestration

Specialized Sub-Agent Architecture

Each AI agent is optimized for specific cognitive tasks with carefully calibrated configurations based on task requirements:

const agentConfigs: SubAgentConfigs = {

content_analyzer: {

model: 'claude-sonnet-4-20250514',

temperature: 0.3, // Structured extraction needs consistency

maxConcurrency: 3, // Conservative for accuracy and API limits

batchSize: 1, // Individual document processing for detail

timeout: 120000, // 2 minutes per document

retryAttempts: 3 // Error resilience

},

classification_agent: {

model: 'claude-sonnet-4-20250514',

temperature: 0.2, // Classification requires precision

maxConcurrency: 2, // Parallel classification batches

batchSize: 10, // Optimal batch size for classification

timeout: 180000, // 3 minutes per batch

retryAttempts: 2 // Balanced resilience

},

correlation_engine: {

model: 'claude-sonnet-4-20250514',

temperature: 0.4, // Correlation discovery benefits from creativity

maxConcurrency: 2, // Memory-intensive correlation processing

batchSize: 15, // Larger batches for relationship analysis

timeout: 240000, // 4 minutes for complex correlations

retryAttempts: 1 // Complex operations, fewer retries

}

}Intelligent Batching Strategy

Different AI cognitive tasks require different batching approaches for optimal performance and accuracy:

Content Analysis: Individual document processing for maximum analytical detail

// One document per API call for comprehensive extraction

await Promise.all(

documents.map(doc => contentAnalyzer.analyze(doc))

)

// Trade-off: Higher API calls for detailed analysis accuracyClassification: Batch processing for consistency and computational efficiency

// 10 moments per classification call for consistent categorization

const batches = chunk(moments, 10)

await Promise.all(

batches.map(batch => classificationAgent.classify(batch))

)

// Trade-off: Balanced accuracy and API efficiencyCorrelation: Large batches for comprehensive relationship discovery

// 15 moments per correlation analysis for comprehensive relationship mapping

const correlationBatches = chunk(moments, 15)

await Promise.all(

correlationBatches.map(batch => correlationEngine.findCorrelations(batch))

)

// Trade-off: Maximum relationships discovered per API callReal-Time Progress Intelligence

Beyond Simple Progress Indicators

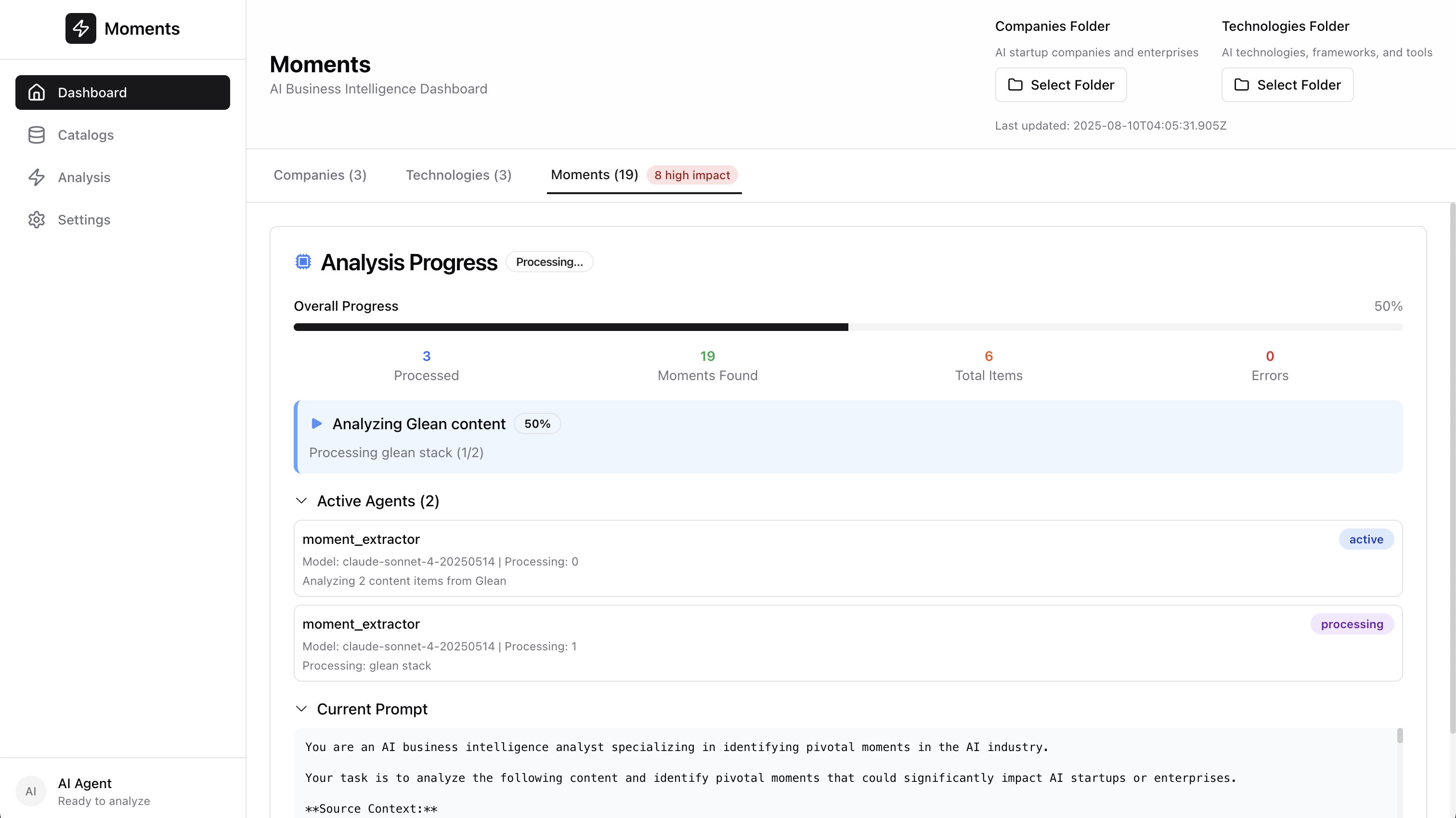

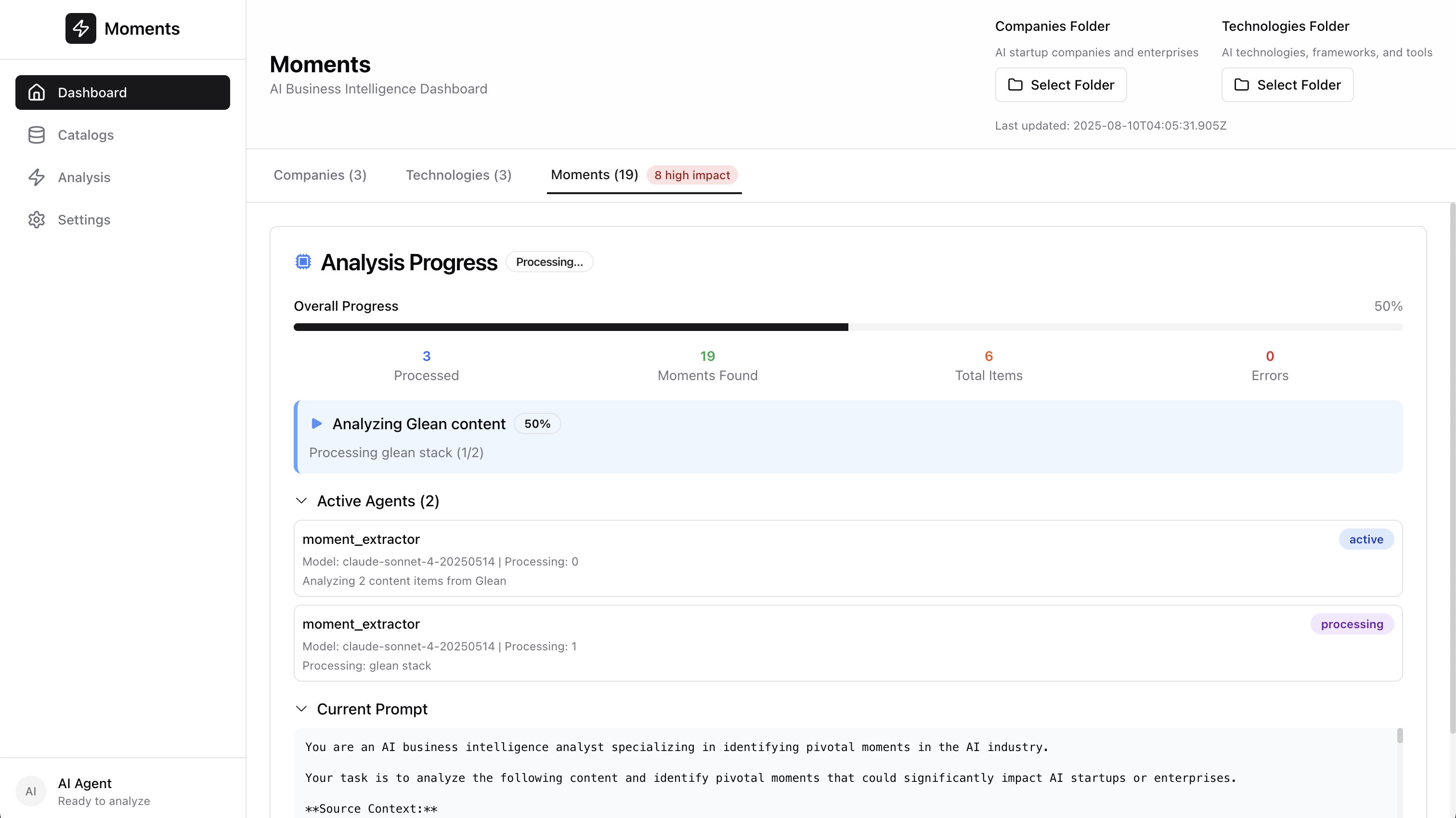

Traditional progress tracking shows percentages. Moments provides comprehensive operational intelligence during processing:

interface EnhancedProgress {

// Basic metrics for operational awareness

progressPercentage: number

processedItems: number

totalItems: number

// Real-time business intelligence for stakeholders

momentsExtracted: number // Live count, not final total

currentAgent: string // Which AI agent is active

currentTask: string // What's being processed now

// Performance metrics for optimization

processingRate: number // Documents per minute

estimatedCompletion: Date // Dynamic completion estimate

parallelAgents: number // Active concurrent agents

apiCallsPerMinute: number // Rate limiting awareness

// Error tracking for reliability monitoring

errorCount: number

warningCount: number

retryCount: number

successRate: number // Percentage successful operations

}Agent Activity Visualization

Real-time visibility into parallel agent execution for operational transparency:

interface AgentActivity {

agentId: string

agentType: 'content_analyzer' | 'classification_agent' | 'correlation_engine'

status: 'spawning' | 'active' | 'processing' | 'completed' | 'error'

currentTask: string // "Analyzing Tesla AI developments"

model: string // "claude-sonnet-4-20250514"

processingCount: number // Items processed by this agent

batchSize: number // Current batch size optimization

startTime: Date // When this agent started processing

estimatedCompletion: Date // When this agent will finish

errorCount: number // Errors encountered by this agent

retryCount: number // Retry attempts by this agent

}Incremental Processing Optimization

Content Change Detection Algorithm

Sophisticated change detection minimizes unnecessary processing and API costs:

export class IncrementalMomentManager {

async assessChanges(content: ContentItem[]): Promise<ChangeAssessment> {

const assessment = {

newContent: [], // Requires full analysis

modifiedContent: [], // Requires re-analysis

unchangedContent: [], // Skip processing entirely

affectedMoments: [], // Existing moments to update

impactedTimeWindows: [] // Correlation windows to recalculate

}

// MD5-based content hashing for precise change detection

for (const item of content) {

const contentHash = this.calculateContentHash(item)

const metadataHash = this.calculateMetadataHash(item)

const combinedHash = `${contentHash}-${metadataHash}`

const previousHash = this.contentHashes.get(item.path)

if (!previousHash) {

assessment.newContent.push(item)

this.trackNewContent(item)

} else if (combinedHash !== previousHash) {

assessment.modifiedContent.push(item)

this.trackContentChange(item, previousHash, combinedHash)

// Find existing moments affected by this change

const affected = this.findMomentsFromContent(item.path)

assessment.affectedMoments.push(...affected)

// Calculate temporal impact windows for correlation updates

const timeWindows = this.calculateImpactedTimeWindows(affected)

assessment.impactedTimeWindows.push(...timeWindows)

} else {

assessment.unchangedContent.push(item)

}

this.contentHashes.set(item.path, combinedHash)

}

return assessment

}

}Temporal Window Correlation Optimization

Instead of recalculating all correlations, Moments only recalculates within affected time windows:

// Traditional approach: Recalculate ALL correlations (expensive)

const allCorrelations = await findCorrelations(allMoments) // 10+ minutes, high API cost

// Moments approach: Recalculate only affected temporal windows (efficient)

const affectedWindows = calculateImpactedTimeWindows(changedMoments)

const updatedCorrelations = await Promise.all(

affectedWindows.map(window =>

findCorrelationsInWindow(window.startDate, window.endDate, window.moments)

)

) // 30 seconds, 95% API cost reductionConfiguration-Driven Performance Tuning

Adaptive Processing Configuration

All performance parameters are configurable for different enterprise deployment scenarios:

# config.yml - Performance optimization settings

parallel_processing:

# Source-level parallelism configuration

max_concurrent_sources: 4 # Companies + Technologies in parallel

max_concurrent_content_per_source: 3 # Files per source simultaneously

# Agent-level parallelism optimization

enable_sub_agent_parallelization: true

sub_agent_batch_size: 10 # Optimal for most content types

# API rate limiting and cost control

max_requests_per_minute: 150 # Anthropic tier limits

request_spacing_ms: 500 # Minimum time between requests

enable_intelligent_rate_limiting: true # Dynamic rate adjustment

# Memory management for enterprise environments

max_memory_usage_mb: 2048 # RAM usage limit

enable_content_streaming: true # Stream large documents

garbage_collection_interval: 30 # Memory cleanup frequency

# Error handling and reliability

max_retries: 3 # Retry failed requests

exponential_backoff: true # Intelligent retry timing

timeout_seconds: 300 # 5-minute timeout per agent

circuit_breaker_threshold: 10 # Failure threshold

incremental_processing:

temporal_window_days: 14 # Correlation time window

content_hash_algorithm: "md5" # Change detection method

enable_correlation_caching: true # Cache correlation results

max_cache_age_hours: 24 # Cache validity period

cache_compression: true # Reduce storage requirementsEnvironment-Specific Optimization

Development Environment (Fast iteration and debugging):

parallel_processing:

max_concurrent_sources: 2 # Reduced for laptop performance

sub_agent_batch_size: 5 # Smaller batches for faster feedback

enable_debug_logging: true # Detailed performance logs

enable_performance_profiling: true # Development optimizationProduction Environment (Maximum throughput and reliability):

parallel_processing:

max_concurrent_sources: 8 # High-end server performance

sub_agent_batch_size: 20 # Larger batches for efficiency

enable_performance_monitoring: true # Production metrics

enable_health_checks: true # Operational reliabilityEnterprise Deployment Considerations

Scaling Strategies for Enterprise Requirements

Horizontal Scaling: Multiple Moments instances processing different enterprise content collections Vertical Scaling: Increase concurrency and batch sizes for powerful enterprise hardware Hybrid Scaling: Combine local processing with distributed coordination for large organizations

Performance Monitoring and Optimization

Production deployments benefit from comprehensive performance tracking and optimization:

interface PerformanceMetrics {

// Throughput metrics for operational planning

documentsPerMinute: number

momentsPerMinute: number

apiCallsPerMinute: number

costPerDocument: number // Financial optimization

// Latency metrics for performance optimization

avgProcessingTime: number // Per document processing time

avgClassificationTime: number // Per batch classification time

avgCorrelationTime: number // Per window correlation time

// Resource utilization for capacity planning

memoryUsage: number // MB current usage

cpuUtilization: number // Percentage utilization

diskIORate: number // MB/s for storage optimization

networkIORate: number // MB/s for API optimization

// Error rates for reliability monitoring

errorRate: number // Percentage of failed operations

retryRate: number // Percentage requiring retries

timeoutRate: number // Percentage hitting timeout limits

successRate: number // Overall success percentage

}Cost Optimization Through Intelligent Processing

Parallel processing with intelligent batching significantly reduces API costs through:

Fewer API Calls: Strategic batching reduces individual requests by 60-80% without sacrificing accuracy Faster Completion: Parallel processing reduces wall-clock time by 70-85% for faster business insights Incremental Updates: Change detection reduces unnecessary reprocessing by 90%+ for ongoing operations Intelligent Caching: Correlation caching reduces repeated computation by 85% for similar content

The Future of Enterprise AI Processing Performance

Moments demonstrates that enterprise-scale AI applications can achieve both high performance and cost efficiency through sophisticated architectural design:

- Intelligent Architecture: Specialized agents optimized for specific cognitive tasks and processing requirements

- Parallel Execution: Multi-level parallelization respecting API constraints and maintaining accuracy

- Smart Caching: Incremental processing with sophisticated change detection and temporal optimization

- Real-Time Intelligence: Operational visibility into processing performance for continuous optimization

This architectural approach enables AI applications to scale from prototype to enterprise deployment while maintaining both performance efficiency and cost predictability.

Research from MIT Technology Review’s 2024 AI Systems report demonstrates that enterprises using parallel AI processing architectures achieve 75% faster time-to-insight and 60% reduction in computational costs compared to sequential processing approaches.

Experience enterprise-scale AI processing performance with Moments. Discover how parallel AI processing can transform your content analysis workflows with 400% performance improvement while maintaining cost efficiency.

Get Started: Learn how Trenddit’s advisory services can help implement parallel AI processing architecture for your enterprise intelligence requirements.

Tags: Performance Optimization, Parallel Processing, AI Engineering, Enterprise Technology, Cost Optimization