Complete Privacy with Ollama: Local AI Processing in Trenddit Memo


For the ultimate in privacy and data control, Trenddit Memo integrates seamlessly with Ollama to provide 100% local AI processing. Your content never leaves your device, yet you still get powerful AI analysis and organization.

This is privacy-first AI at its finest: all the intelligence, none of the surveillance.

Complete Privacy with Ollama

100% Local AI Processing

For maximum privacy, use Ollama to run AI models entirely on your machine:

Complete offline operation:

- No data ever sent to external servers

- All AI processing happens on your device

- Full functionality without an internet connection once models are downloaded

- Zero ongoing costs after initial setup

Why Choose Local AI?

Uncompromising privacy:

- Air-gapped processing: Content never leaves your network

- Audit-friendly: Complete transparency in data handling

- Compliance-ready: Keeping data on your device simplifies meeting strict privacy regulations

- Cost-effective: No per-request charges after setup

Perfect for sensitive content:

- Business strategy: Competitive research and planning

- Personal information: Health, finance, or family content

- Regulated industries: Legal, medical, or compliance-sensitive work

- Confidential projects: Anything requiring complete data isolation

Ollama Integration Features

Enhanced Ollama Integration

Seamless local processing:

- Automatic model detection: Discovers your installed models

- Robust retry logic: Handles connection issues gracefully

- Performance optimization: Smart caching and batching

- Error recovery: Comprehensive failure handling
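The detection and retry behavior listed above could be sketched roughly as follows. This is a minimal illustration, not Trenddit Memo's actual code: it assumes Ollama is listening on its default address (`http://localhost:11434`) and uses the `/api/tags` route, which lists installed models. The helper names `with_retry`, `parse_model_names`, and `list_local_models` are hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable, List, TypeVar

T = TypeVar("T")

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address; adjust if yours differs


def with_retry(call: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
               sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying on OSError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return call()
        except OSError:  # URLError, ConnectionError, and timeouts are OSError subclasses
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


def parse_model_names(tags_json: str) -> List[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in json.loads(tags_json).get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> List[str]:
    """Ask the local Ollama server which models are installed."""
    def fetch() -> str:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
            return resp.read().decode("utf-8")
    return parse_model_names(with_retry(fetch))
```

Calling `list_local_models()` with Ollama running should return names such as the ones you pulled; if the server is still starting, the backoff gives it a few chances before the error is surfaced.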

Supported Models

Run industry-standard models locally:

- Llama 3.1: Meta’s open-weight large language model

- Mistral: High-performance multilingual models

- CodeLlama: Specialized for code analysis and generation

- Phi-3: Microsoft’s efficient small language models

- Gemma: Google’s lightweight yet powerful models

Model Management

Easy model handling:

- One-click installation: Download and install models directly

- Version control: Manage different model versions

- Resource monitoring: Track CPU, memory, and storage usage

- Performance tuning: Optimize models for your hardware

Local Processing Workflow

When to Use Local Processing

Ideal scenarios for Ollama:

- Sensitive business content: Competitive research, strategic planning

- Personal information: Health, finance, or family-related content

- Compliance requirements: Industries with strict data regulations

- Confidential work: Legal, medical, or consulting projects

Local processing workflow:

Sensitive Content → Local Capture → Ollama Processing → Local Storage

↓ ↓ ↓ ↓

Private Web Your Device Your AI Model Your Data

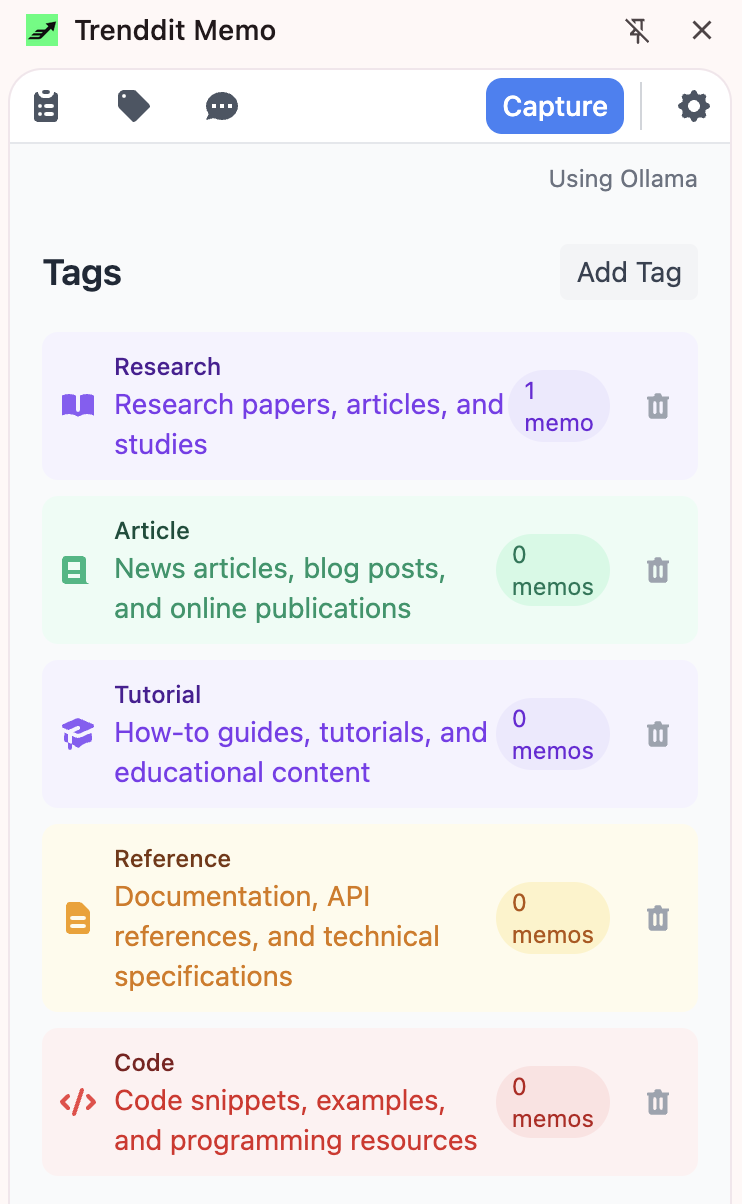

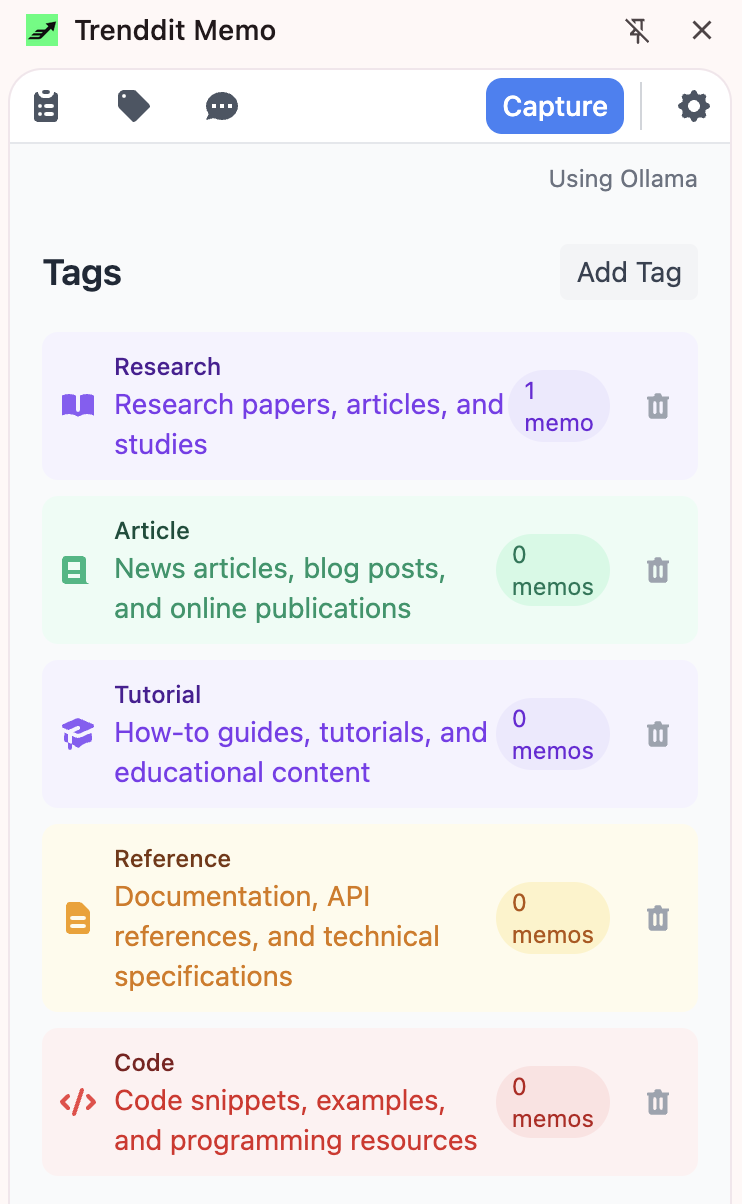

Content Only Only OnlyContent Analysis with Local AI

Powerful analysis, complete privacy:

- Content summarization: Extract key points without cloud processing

- Intelligent tagging: Organize content using local AI analysis

- Question answering: Query your knowledge base privately

- Content connections: Find relationships between saved items
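As a rough illustration of how summarization and tagging might be requested from a local model, the sketch below builds a body for Ollama's `/api/generate` route and parses the reply. It assumes the non-streaming mode (`"stream": false`) and the `"format": "json"` option; the prompt wording and the function names are hypothetical, not taken from Trenddit Memo.

```python
import json
from typing import Any, Dict, List


def build_tagging_request(text: str, model: str = "llama3.1") -> Dict[str, Any]:
    """Build a JSON body for /api/generate that asks for a summary and tags."""
    prompt = (
        "Summarize the text in one sentence and suggest up to five topic tags. "
        'Reply as JSON: {"summary": "...", "tags": ["..."]}\n\n'
        f"Text:\n{text}"
    )
    return {"model": model, "prompt": prompt, "format": "json", "stream": False}


def parse_tagging_response(body: str) -> Dict[str, Any]:
    """Pull the summary and tags out of a non-streaming /api/generate response body."""
    generated = json.loads(body)["response"]  # the model's output is itself a JSON string
    data = json.loads(generated)
    tags: List[str] = [str(t).strip().lower() for t in data.get("tags", []) if str(t).strip()]
    return {"summary": str(data.get("summary", "")).strip(), "tags": tags[:5]}
```

Normalizing tags (trimming, lowercasing, capping at five) keeps the saved metadata consistent even when a smaller model formats its answer loosely.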

Hybrid Processing Strategy

Best of both worlds:

- Public content: Use cloud providers for speed and latest features

- Sensitive content: Switch to Ollama for complete privacy

- Smart routing: Automatically choose provider based on content sensitivity

- Flexible configuration: Set different defaults for different contexts
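Smart routing could take many forms. The sketch below shows one simple possibility: a rule-based check that sends content to Ollama when it looks sensitive. The pattern list, host suffixes, provider labels, and the `choose_provider` name are all illustrative assumptions rather than Trenddit Memo's real rules.

```python
import re
from urllib.parse import urlparse

# Hypothetical signals; a real deployment would tune these lists.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like number
    re.compile(r"\b(confidential|diagnosis|salary|password|attorney)\b", re.IGNORECASE),
]
PRIVATE_HOST_SUFFIXES = (".internal", ".local", ".corp")


def choose_provider(text: str, url: str = "", default: str = "cloud") -> str:
    """Return "ollama" for content that looks sensitive, otherwise the default provider."""
    host = urlparse(url).hostname or ""
    if host == "localhost" or host.endswith(PRIVATE_HOST_SUFFIXES):
        return "ollama"
    if any(p.search(text) for p in SENSITIVE_PATTERNS):
        return "ollama"
    return default
```

Setting `default="ollama"` turns the same function into a local-first policy, where nothing is sent to a cloud provider regardless of the heuristics.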

Technical Implementation

System Requirements

Recommended specifications:

- CPU: 8+ cores for optimal performance

- RAM: 16GB+ for larger models

- Storage: 50GB+ for model storage

- OS: macOS, Linux, or Windows (natively or via WSL2)
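To relate these figures to a specific model, a common rule of thumb is that the weights need roughly (parameter count × bits per weight ÷ 8) bytes, plus some headroom for context and runtime buffers. The sketch below encodes that arithmetic; the 20% overhead is an assumed figure, and `estimate_model_memory_gb` is a hypothetical helper, so treat the results as ballpark numbers only.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: int = 4,
                             overhead: float = 0.2) -> float:
    """Rough memory needed to load a model: weight bytes plus a fractional overhead."""
    # 1e9 parameters at (bits / 8) bytes each is (bits / 8) GB per billion parameters.
    weight_gb = params_billions * bits_per_weight / 8
    return round(weight_gb * (1 + overhead), 1)
```

Under these assumptions an 8-billion-parameter model quantized to 4 bits comes out at about 4.8 GB, which is why it fits comfortably on a 16GB machine, while the same model at 16 bits would need about 19.2 GB.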

Installation and Setup

Getting started with Ollama:

- Install Ollama: Download from ollama.ai

- Pull models: Run `ollama pull llama3.1`, or pull your preferred model

- Configure Trenddit Memo: Select Ollama as your AI provider

- Start processing: Your content stays completely local
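Once a model is pulled, one quick way to confirm the local server responds is to send it a single prompt. The sketch below does this with Python's standard library, assuming Ollama's default address and its `/api/generate` route in non-streaming mode. The function names and the injectable `send` parameter are illustrative choices, not part of Ollama or Trenddit Memo.

```python
import json
import urllib.request
from typing import Callable, Optional


def build_generate_request(prompt: str, model: str = "llama3.1",
                           base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate route."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate", data=body,
        headers={"Content-Type": "application/json"}, method="POST",
    )


def generate(prompt: str, model: str = "llama3.1",
             send: Optional[Callable[[urllib.request.Request], bytes]] = None) -> str:
    """Send one prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    if send is None:
        def send(req: urllib.request.Request) -> bytes:
            with urllib.request.urlopen(req, timeout=120) as resp:
                return resp.read()
    return json.loads(send(request))["response"]
```

With Ollama running, `print(generate("Say hello in five words."))` should print a short reply produced entirely on your machine.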

Performance Optimization

Maximize local processing speed:

- Model selection: Choose appropriate model size for your hardware

- Batch processing: Group similar requests for efficiency

- Caching: Store frequently accessed results locally

- Resource management: Balance model performance with system resources
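The caching idea can be illustrated with a small in-memory cache keyed by a hash of the model name and prompt, so repeated analysis of the same content skips the model entirely. This is a sketch under simple assumptions (no eviction, no persistence); `ResponseCache` is a hypothetical class, not Trenddit Memo's implementation.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """In-memory cache keyed by a hash of (model, prompt)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def key(model: str, prompt: str) -> str:
        # A NUL separator keeps ("a", "bc") and ("ab", "c") from colliding.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str, compute: Callable[[], str]) -> str:
        """Return the cached result, or call compute() once and remember it."""
        k = self.key(model, prompt)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        result = compute()
        self._store[k] = result
        return result
```

Including the model name in the key matters: the same prompt sent to a different model is a different result and should not be served from the cache.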

Privacy and Security Benefits

Zero External Dependencies

Complete independence:

- No internet required: Process content fully offline once models are downloaded

- No API keys: No external service credentials needed

- No rate limits: Process as much content as you want

- No service outages: Always available when you need it

Data Sovereignty

Your data, your control:

- Geographic control: Data never crosses borders

- Legal compliance: Meet local data protection requirements

- Corporate policies: Satisfy enterprise security requirements

- Personal peace of mind: Know exactly where your data is

Audit and Compliance

Enterprise-ready privacy:

- Complete audit trail: Track all processing activities

- Data lineage: Understand how content flows through the system

- Compliance reporting: Generate detailed privacy reports

- Zero-trust verification: Verify no external data transmission
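One small piece of such verification can be automated: checking that the configured AI endpoint actually points at the local machine. The sketch below tests whether a URL's host is `localhost` or a loopback IP address. It only validates configuration, so it complements rather than replaces network-level monitoring; `is_loopback_endpoint` is a hypothetical helper.

```python
import ipaddress
from urllib.parse import urlparse


def is_loopback_endpoint(url: str) -> bool:
    """True if the URL's host is localhost or a loopback IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:  # a DNS name other than localhost
        return False
```

A check like this could run before every request, refusing to send sensitive content when the provider URL has been changed to a LAN or remote address.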

Advanced Local AI Features

Custom Model Integration

Tailor AI to your needs:

- Domain-specific models: Use specialized models for your field

- Fine-tuned models: Customize models for your specific use cases

- Private model training: Train models on your proprietary data

- Model versioning: Manage different model versions for different tasks
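A lightweight form of customization in Ollama is a Modelfile, which layers a system prompt and parameters on top of a base model without retraining any weights. The sketch below renders one from Python using the `FROM`, `PARAMETER`, and `SYSTEM` instructions; the `render_modelfile` helper and the example values are illustrative assumptions.

```python
from typing import Dict, Optional, Union


def render_modelfile(base_model: str, system_prompt: str,
                     parameters: Optional[Dict[str, Union[int, float]]] = None) -> str:
    """Render Modelfile text with a base model, parameters, and a system prompt."""
    if '"""' in system_prompt:
        raise ValueError("system prompt must not contain triple quotes")
    lines = [f"FROM {base_model}"]
    for name, value in sorted((parameters or {}).items()):
        lines.append(f"PARAMETER {name} {value}")
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"
```

Saving the output as `Modelfile` and running `ollama create memo-tagger -f Modelfile` (the name `memo-tagger` is just an example) would register a variant that can then be selected like any other installed model.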

Performance Monitoring

Optimize local processing:

- Resource utilization: Monitor CPU, memory, and GPU usage

- Processing speed: Track analysis time and throughput

- Model efficiency: Compare performance across different models

- System health: Ensure optimal local processing environment
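Processing speed can be derived from timing fields that Ollama includes in a completed `/api/generate` response: `eval_count` (tokens generated) and `eval_duration` (nanoseconds spent generating). The sketch below turns them into tokens per second; `tokens_per_second` is a hypothetical helper name.

```python
from typing import Any, Dict


def tokens_per_second(response: Dict[str, Any]) -> float:
    """Generation speed computed from eval_count and eval_duration (nanoseconds)."""
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0  # missing or zero duration: report no throughput instead of dividing by zero
    return response.get("eval_count", 0) * 1e9 / duration_ns
```

Logging this value per model makes it straightforward to compare, for example, an 8B model against a smaller one on the same hardware.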

Batch Processing

Efficient content analysis:

- Queue management: Process multiple items efficiently

- Priority handling: Prioritize urgent content analysis

- Resource scheduling: Optimize processing during low-usage periods

- Progress tracking: Monitor batch processing status
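Queueing with priorities and progress reporting can be sketched with Python's `heapq`. In this illustration, lower numbers run first and items with equal priority keep their insertion order. The `AnalysisQueue` class and its `on_progress` callback are hypothetical and stand in for whatever Trenddit Memo does internally.

```python
import heapq
import itertools
from typing import Callable, List, Optional, Tuple


class AnalysisQueue:
    """Priority queue: lower numbers are processed first, FIFO within a priority."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker that preserves insertion order

    def add(self, item: str, priority: int = 5) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), item))

    def process_all(self, analyze: Callable[[str], str],
                    on_progress: Optional[Callable[[int, int], None]] = None
                    ) -> List[Tuple[str, str]]:
        """Analyze every queued item in priority order, reporting (done, total)."""
        total = len(self._heap)
        results: List[Tuple[str, str]] = []
        while self._heap:
            _, _, item = heapq.heappop(self._heap)
            results.append((item, analyze(item)))
            if on_progress is not None:
                on_progress(len(results), total)
        return results
```

In practice `analyze` would be a function that calls the local model, and `on_progress` could update a status indicator while a large batch runs.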

The Future of Local AI

Evolving Capabilities

Local AI is getting better:

- Smaller, faster models: More efficient processing with less hardware

- Specialized models: Task-specific models for better results

- Hardware acceleration: GPU and neural processing unit support

- Edge computing: Distributed processing while maintaining privacy

Trenddit Memo’s Local AI Roadmap

Upcoming enhancements:

- Enhanced model management: Easier model installation and updates

- Advanced privacy controls: Even more granular privacy settings

- Performance optimization: Faster processing with better resource usage

- Custom model training: Tools for creating domain-specific models

Getting Started with Local AI

Quick Setup Guide

Start processing locally in minutes:

- Install Ollama: Download and install on your system

- Download a model: Run `ollama pull llama3.1:8b` for a balanced model

- Configure Trenddit Memo: Set Ollama as your preferred provider

- Test processing: Capture some content and analyze it locally

- Optimize settings: Adjust model and performance settings

Best Practices

Maximize your local AI experience:

- Start small: Begin with smaller models and scale up

- Monitor resources: Keep an eye on system performance

- Update regularly: Keep Ollama and models updated

- Backup configurations: Save your model and provider settings

Complete Privacy, Full Power

With Ollama integration, Trenddit Memo proves that privacy and functionality aren’t mutually exclusive:

Local AI advantages:

- ✅ 100% local processing - No data ever leaves your device

- ✅ Complete offline capability - Work without internet

- ✅ No ongoing costs - No per-request charges

- ✅ Enterprise compliance - Supports strict privacy requirements by keeping data on-device

- ✅ Full control - Your models, your rules

Privacy without compromise:

- Process sensitive content with confidence

- Meet regulatory compliance requirements

- Maintain complete data sovereignty

- Enjoy unlimited AI processing

Ready to experience completely private AI? Learn about Trenddit Memo’s privacy-first design and discover how local-first architecture protects your data.


Experience the future of private AI. Install Trenddit Memo and connect it to Ollama for completely local, completely private AI processing.