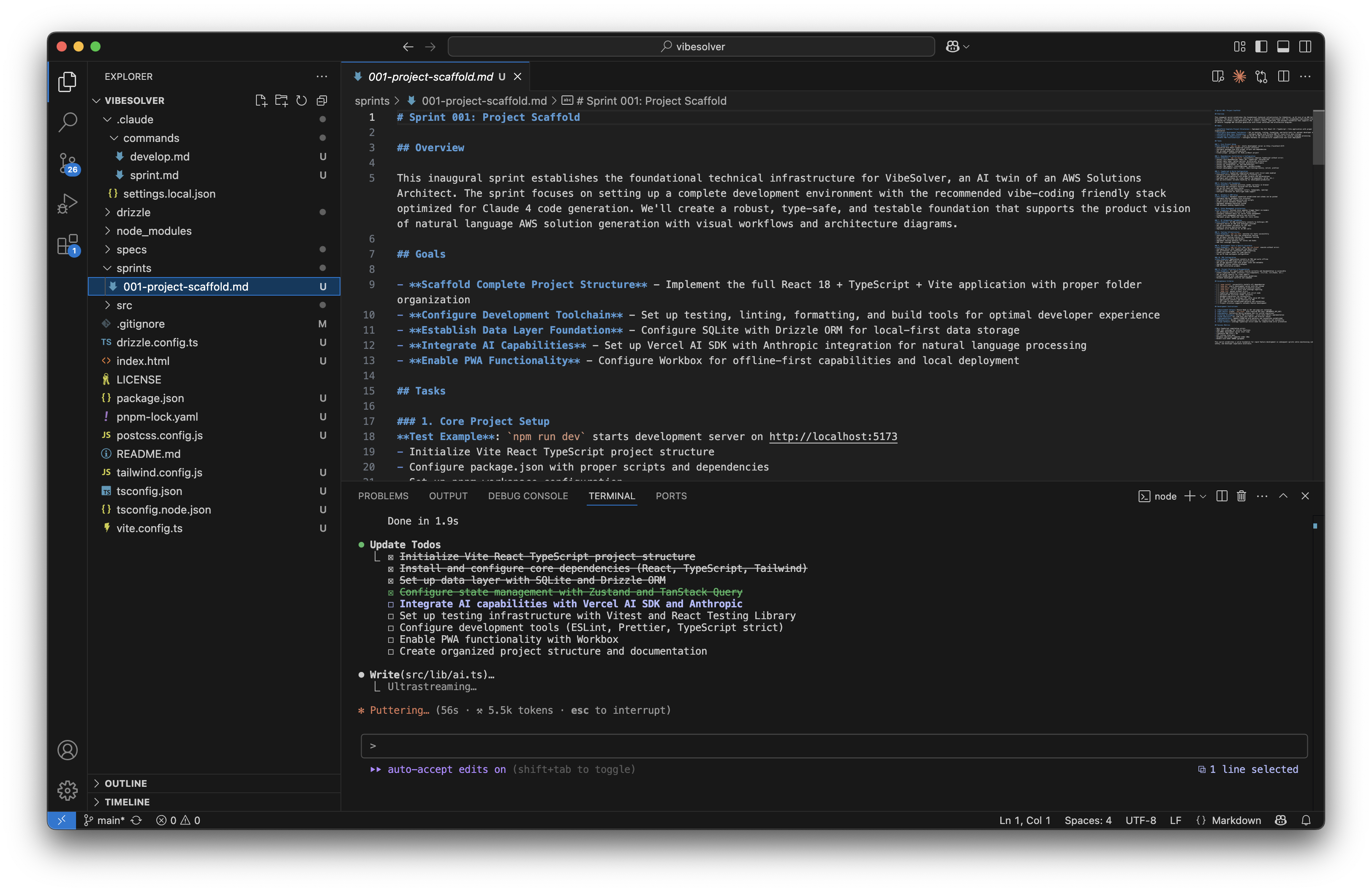

AI Development Tools & Infrastructure: Modern DevOps for AI-Powered Applications

Scroll to explore

Building AI-powered applications requires specialized development tools and infrastructure that can handle the unique challenges of AI integration, model management, and intelligent automation workflows.

This guide explores the essential development tools and infrastructure patterns that enable efficient AI application development and deployment.

AI Integration Layer

Service Architecture for AI Integration

Modern AI Service Layer:

# AI service abstraction for multiple providers

from abc import ABC, abstractmethod

from typing import Dict, Any, AsyncGenerator

from dataclasses import dataclass

import asyncio

import aiohttp

@dataclass

class AIResponse:

content: str

metadata: Dict[str, Any]

provider: str

model: str

usage: Dict[str, int]

class AIServiceProvider(ABC):

"""Abstract base class for AI service providers"""

@abstractmethod

async def generate_response(self, prompt: str, **kwargs) -> AIResponse:

pass

@abstractmethod

async def stream_response(self, prompt: str, **kwargs) -> AsyncGenerator[str, None]:

pass

class AnthropicProvider(AIServiceProvider):

def __init__(self, api_key: str):

self.api_key = api_key

self.base_url = "https://api.anthropic.com"

async def generate_response(self, prompt: str, **kwargs) -> AIResponse:

model = kwargs.get('model', 'claude-3.5-sonnet')

max_tokens = kwargs.get('max_tokens', 1000)

async with aiohttp.ClientSession() as session:

headers = {

'Authorization': f'Bearer {self.api_key}',

'Content-Type': 'application/json',

'anthropic-version': '2023-06-01'

}

payload = {

'model': model,

'max_tokens': max_tokens,

'messages': [{'role': 'user', 'content': prompt}]

}

async with session.post(f'{self.base_url}/v1/messages',

headers=headers, json=payload) as response:

if response.status == 200:

data = await response.json()

return AIResponse(

content=data['content'][0]['text'],

metadata=data.get('metadata', {}),

provider='anthropic',

model=model,

usage=data.get('usage', {})

)

else:

raise Exception(f"API request failed: {response.status}")

async def stream_response(self, prompt: str, **kwargs) -> AsyncGenerator[str, None]:

# Implementation for streaming responses

model = kwargs.get('model', 'claude-3.5-sonnet')

async with aiohttp.ClientSession() as session:

headers = {

'Authorization': f'Bearer {self.api_key}',

'Content-Type': 'application/json',

'anthropic-version': '2023-06-01'

}

payload = {

'model': model,

'max_tokens': kwargs.get('max_tokens', 1000),

'messages': [{'role': 'user', 'content': prompt}],

'stream': True

}

async with session.post(f'{self.base_url}/v1/messages',

headers=headers, json=payload) as response:

async for line in response.content:

if line:

decoded = line.decode('utf-8').strip()

if decoded.startswith('data: '):

yield decoded[6:] # Remove 'data: ' prefix

class AIServiceManager:

"""Manages multiple AI service providers with fallback"""

def __init__(self):

self.providers: Dict[str, AIServiceProvider] = {}

self.primary_provider = None

self.fallback_providers = []

def add_provider(self, name: str, provider: AIServiceProvider, is_primary: bool = False):

self.providers[name] = provider

if is_primary:

self.primary_provider = name

else:

self.fallback_providers.append(name)

async def generate_response(self, prompt: str, **kwargs) -> AIResponse:

# Try primary provider first

if self.primary_provider:

try:

return await self.providers[self.primary_provider].generate_response(prompt, **kwargs)

except Exception as e:

print(f"Primary provider failed: {e}")

# Try fallback providers

for provider_name in self.fallback_providers:

try:

return await self.providers[provider_name].generate_response(prompt, **kwargs)

except Exception as e:

print(f"Fallback provider {provider_name} failed: {e}")

raise Exception("All AI providers failed")API Integration Patterns

FastAPI Backend with AI Integration:

from fastapi import FastAPI, HTTPException, Depends, BackgroundTasks

from fastapi.middleware.cors import CORSMiddleware

from pydantic import BaseModel

from typing import Optional, List

import asyncio

from datetime import datetime

app = FastAPI(title="AI Development API", version="1.0.0")

# CORS middleware for development

app.add_middleware(

CORSMiddleware,

allow_origins=["http://localhost:3000", "http://localhost:4321"],

allow_credentials=True,

allow_methods=["*"],

allow_headers=["*"],

)

# Request/Response models

class AIRequest(BaseModel):

prompt: str

model: Optional[str] = "claude-3.5-sonnet"

max_tokens: Optional[int] = 1000

stream: Optional[bool] = False

class AIResponseModel(BaseModel):

content: str

provider: str

model: str

usage: dict

timestamp: datetime

# AI service dependency

def get_ai_service():

service_manager = AIServiceManager()

service_manager.add_provider(

"anthropic",

AnthropicProvider(api_key="your-api-key"),

is_primary=True

)

return service_manager

@app.post("/api/ai/generate", response_model=AIResponseModel)

async def generate_ai_response(

request: AIRequest,

ai_service: AIServiceManager = Depends(get_ai_service)

):

"""Generate AI response with error handling and logging"""

try:

response = await ai_service.generate_response(

prompt=request.prompt,

model=request.model,

max_tokens=request.max_tokens

)

return AIResponseModel(

content=response.content,

provider=response.provider,

model=response.model,

usage=response.usage,

timestamp=datetime.now()

)

except Exception as e:

raise HTTPException(status_code=500, detail=f"AI generation failed: {str(e)}")

@app.post("/api/ai/analyze")

async def analyze_content(

request: AIRequest,

background_tasks: BackgroundTasks,

ai_service: AIServiceManager = Depends(get_ai_service)

):

"""Analyze content with background processing"""

# Start background analysis

background_tasks.add_task(

process_analysis,

request.prompt,

ai_service

)

return {"message": "Analysis started", "status": "processing"}

async def process_analysis(content: str, ai_service: AIServiceManager):

"""Background task for content analysis"""

try:

analysis_prompt = f"""

Analyze the following content and provide:

1. Key themes and topics

2. Sentiment analysis

3. Content quality score

4. Improvement suggestions

Content: {content}

"""

response = await ai_service.generate_response(analysis_prompt)

# Store results in database or cache

# await store_analysis_results(content, response)

print(f"Analysis completed for content: {content[:50]}...")

except Exception as e:

print(f"Analysis failed: {e}")Development Tools and Infrastructure

Testing Framework for AI Applications

Comprehensive Testing Strategy:

# test_ai_services.py

import pytest

import asyncio

from unittest.mock import Mock, patch, AsyncMock

from ai_services import AIServiceManager, AnthropicProvider, AIResponse

class TestAIServiceManager:

"""Test suite for AI service management"""

@pytest.fixture

def mock_anthropic_provider(self):

provider = Mock(spec=AnthropicProvider)

provider.generate_response = AsyncMock()

provider.stream_response = AsyncMock()

return provider

@pytest.fixture

def service_manager(self, mock_anthropic_provider):

manager = AIServiceManager()

manager.add_provider("anthropic", mock_anthropic_provider, is_primary=True)

return manager

@pytest.mark.asyncio

async def test_successful_generation(self, service_manager, mock_anthropic_provider):

# Arrange

expected_response = AIResponse(

content="Test response",

metadata={"model": "claude-3.5-sonnet"},

provider="anthropic",

model="claude-3.5-sonnet",

usage={"input_tokens": 10, "output_tokens": 20}

)

mock_anthropic_provider.generate_response.return_value = expected_response

# Act

result = await service_manager.generate_response("Test prompt")

# Assert

assert result.content == "Test response"

assert result.provider == "anthropic"

mock_anthropic_provider.generate_response.assert_called_once_with("Test prompt")

@pytest.mark.asyncio

async def test_provider_fallback(self, service_manager, mock_anthropic_provider):

# Arrange

mock_fallback = Mock(spec=AnthropicProvider)

mock_fallback.generate_response = AsyncMock()

service_manager.add_provider("fallback", mock_fallback)

# Primary provider fails

mock_anthropic_provider.generate_response.side_effect = Exception("Primary failed")

# Fallback succeeds

expected_response = AIResponse(

content="Fallback response",

metadata={},

provider="fallback",

model="claude-3.5-sonnet",

usage={}

)

mock_fallback.generate_response.return_value = expected_response

# Act

result = await service_manager.generate_response("Test prompt")

# Assert

assert result.content == "Fallback response"

assert result.provider == "fallback"

@pytest.mark.asyncio

async def test_all_providers_fail(self, service_manager, mock_anthropic_provider):

# Arrange

mock_anthropic_provider.generate_response.side_effect = Exception("All failed")

# Act & Assert

with pytest.raises(Exception, match="All AI providers failed"):

await service_manager.generate_response("Test prompt")

# Integration tests

class TestAIAPIIntegration:

"""Integration tests for AI API endpoints"""

@pytest.fixture

def test_client(self):

from fastapi.testclient import TestClient

from main import app

return TestClient(app)

@patch('main.get_ai_service')

def test_generate_endpoint(self, mock_get_ai_service, test_client):

# Arrange

mock_service = Mock()

mock_service.generate_response = AsyncMock()

mock_service.generate_response.return_value = AIResponse(

content="Generated content",

metadata={},

provider="test",

model="test-model",

usage={"input_tokens": 5, "output_tokens": 10}

)

mock_get_ai_service.return_value = mock_service

# Act

response = test_client.post("/api/ai/generate", json={

"prompt": "Test prompt",

"model": "claude-3.5-sonnet"

})

# Assert

assert response.status_code == 200

data = response.json()

assert data["content"] == "Generated content"

assert data["provider"] == "test"

# Load testing for AI services

@pytest.mark.asyncio

@pytest.mark.load_test

async def test_concurrent_ai_requests():

"""Test AI service under concurrent load"""

service_manager = AIServiceManager()

# Mock provider for load testing

mock_provider = Mock(spec=AnthropicProvider)

mock_provider.generate_response = AsyncMock()

mock_provider.generate_response.return_value = AIResponse(

content="Load test response",

metadata={},

provider="mock",

model="test",

usage={}

)

service_manager.add_provider("mock", mock_provider, is_primary=True)

# Create multiple concurrent requests

tasks = []

for i in range(50): # 50 concurrent requests

task = service_manager.generate_response(f"Test prompt {i}")

tasks.append(task)

# Execute all requests concurrently

start_time = asyncio.get_event_loop().time()

results = await asyncio.gather(*tasks)

end_time = asyncio.get_event_loop().time()

# Assertions

assert len(results) == 50

assert all(result.content == "Load test response" for result in results)

# Performance assertion (should complete within reasonable time)

execution_time = end_time - start_time

assert execution_time < 5.0 # Should complete within 5 seconds

print(f"Load test completed in {execution_time:.2f} seconds")CI/CD Pipeline Configuration

GitHub Actions for AI Applications:

# .github/workflows/ai-app-ci-cd.yml

name: AI Application CI/CD

on:

push:

branches: [ main, develop ]

pull_request:

branches: [ main ]

env:

NODE_VERSION: '18'

PYTHON_VERSION: '3.11'

jobs:

test-frontend:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Setup Node.js

uses: actions/setup-node@v4

with:

node-version: ${{ env.NODE_VERSION }}

cache: 'npm'

- name: Install dependencies

run: npm ci

- name: Run TypeScript checks

run: npm run type-check

- name: Run linting

run: npm run lint

- name: Run frontend tests

run: npm run test:coverage

- name: Upload coverage reports

uses: codecov/codecov-action@v3

with:

file: ./coverage/lcov.info

flags: frontend

test-backend:

runs-on: ubuntu-latest

services:

postgres:

image: postgres:15

env:

POSTGRES_PASSWORD: postgres

POSTGRES_DB: test_ai_app

options: >-

--health-cmd pg_isready

--health-interval 10s

--health-timeout 5s

--health-retries 5

steps:

- uses: actions/checkout@v4

- name: Setup Python

uses: actions/setup-python@v4

with:

python-version: ${{ env.PYTHON_VERSION }}

cache: 'pip'

- name: Install dependencies

run: |

python -m pip install --upgrade pip

pip install -r requirements.txt

pip install -r requirements-dev.txt

- name: Run backend tests

env:

DATABASE_URL: postgresql://postgres:postgres@localhost:5432/test_ai_app

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY_TEST }}

run: |

pytest --cov=. --cov-report=xml --cov-report=html -v

- name: Run security scan

run: |

safety check

bandit -r . -f json -o bandit-report.json

- name: Upload coverage reports

uses: codecov/codecov-action@v3

with:

file: ./coverage.xml

flags: backend

ai-model-validation:

runs-on: ubuntu-latest

if: github.event_name == 'pull_request'

steps:

- uses: actions/checkout@v4

- name: Setup Python

uses: actions/setup-python@v4

with:

python-version: ${{ env.PYTHON_VERSION }}

- name: Install dependencies

run: |

pip install -r requirements.txt

pip install pytest

- name: Validate AI model integration

env:

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY_TEST }}

run: |

pytest tests/test_ai_integration.py -v -m "not slow"

- name: Run AI response quality tests

env:

ANTHROPIC_API_KEY: ${{ secrets.ANTHROPIC_API_KEY_TEST }}

run: |

python scripts/test_ai_quality.py

build-and-deploy:

runs-on: ubuntu-latest

needs: [test-frontend, test-backend]

if: github.ref == 'refs/heads/main'

steps:

- uses: actions/checkout@v4

- name: Setup Node.js

uses: actions/setup-node@v4

with:

node-version: ${{ env.NODE_VERSION }}

cache: 'npm'

- name: Build frontend

run: |

npm ci

npm run build

- name: Setup Python

uses: actions/setup-python@v4

with:

python-version: ${{ env.PYTHON_VERSION }}

- name: Build backend

run: |

pip install -r requirements.txt

python -m pytest --collect-only # Validate app structure

- name: Deploy to staging

env:

DEPLOY_KEY: ${{ secrets.DEPLOY_KEY }}

STAGING_HOST: ${{ secrets.STAGING_HOST }}

run: |

# Deploy to staging environment

echo "Deploying to staging..."

# Add your deployment script here

- name: Run smoke tests

env:

STAGING_URL: ${{ secrets.STAGING_URL }}

run: |

# Run smoke tests against staging

python scripts/smoke_tests.py

- name: Deploy to production

if: success()

env:

DEPLOY_KEY: ${{ secrets.DEPLOY_KEY }}

PRODUCTION_HOST: ${{ secrets.PRODUCTION_HOST }}

run: |

# Deploy to production environment

echo "Deploying to production..."

# Add your production deployment script hereDocker Configuration for AI Applications

Multi-stage Dockerfile:

# Dockerfile for AI application

FROM node:18-alpine AS frontend-builder

WORKDIR /app/frontend

# Copy package files

COPY frontend/package*.json ./

RUN npm ci --only=production

# Copy source and build

COPY frontend/ ./

RUN npm run build

# Python backend stage

FROM python:3.11-slim AS backend-builder

WORKDIR /app

# Install system dependencies

RUN apt-get update && apt-get install -y \

gcc \

g++ \

&& rm -rf /var/lib/apt/lists/*

# Copy requirements and install Python dependencies

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

# Production stage

FROM python:3.11-slim AS production

WORKDIR /app

# Install runtime dependencies

RUN apt-get update && apt-get install -y \

curl \

&& rm -rf /var/lib/apt/lists/*

# Copy Python dependencies

COPY --from=backend-builder /usr/local/lib/python3.11/site-packages /usr/local/lib/python3.11/site-packages

COPY --from=backend-builder /usr/local/bin /usr/local/bin

# Copy built frontend

COPY --from=frontend-builder /app/frontend/dist ./static

# Copy backend source

COPY backend/ ./

# Create non-root user

RUN useradd --create-home --shell /bin/bash app

USER app

# Health check

HEALTHCHEK --interval=30s --timeout=30s --start-period=5s --retries=3 \

CMD curl -f http://localhost:8000/health || exit 1

# Expose port

EXPOSE 8000

# Start application

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]Integration with Trenddit Ecosystem

This development infrastructure integrates with Trenddit’s automation platform:

Trenddit Memo Integration:

- Shared Infrastructure: Common CI/CD patterns across all Trenddit products

- Cross-Platform Testing: Unified testing strategies for web and browser extension

- Performance Monitoring: Consistent monitoring and alerting across platforms

- Security Standards: Shared security scanning and compliance tools

Ecosystem Benefits:

- Unified DevOps: Consistent development workflows across all products

- Shared Learning: Infrastructure patterns transfer between projects

- Cost Optimization: Shared resources and tooling reduce operational costs

- Quality Assurance: Standardized testing and deployment practices

Next Steps and Advanced Topics

Continue building robust AI development infrastructure:

- AI-Friendly Frontend Architecture for UI patterns

- Technology Stack Optimization for performance

- Enterprise AI Development Workflows for scaling

By implementing these development tools and infrastructure patterns, teams can build reliable, scalable AI applications with confidence, ensuring smooth development workflows and robust production deployments.