Vibe Coding Workflow Fundamentals: Essential Patterns for AI-Assisted Development

Scroll to explore

Vibe coding represents a fundamental shift in software development methodology, where AI becomes an integral part of the development process. However, achieving production-quality results requires mastering essential workflow patterns, testing strategies, and architectural principles that ensure reliable, maintainable code.

This comprehensive guide explores the foundational elements that transform simple AI-assisted coding into professional development practices suitable for enterprise applications.

Core Workflow Activities

Configuration Management

Environment Setup and Consistency:

# .vibeconfig.py - Project configuration for AI development

class VibeConfig:

def __init__(self):

self.ai_model_preferences = {

'primary': 'claude-3.5-sonnet',

'fallback': 'gpt-4',

'code_review': 'claude-3.5-sonnet',

'documentation': 'gpt-4'

}

self.development_patterns = {

'test_driven': True,

'git_workflow': 'feature-branch',

'code_style': 'black + isort + flake8',

'documentation_level': 'comprehensive'

}

self.quality_gates = {

'test_coverage_minimum': 80,

'complexity_threshold': 10,

'security_scan': True,

'performance_benchmark': True

}Context Memory Management:

class DevelopmentContext:

def __init__(self, project_root: str):

self.project_root = Path(project_root)

self.context_file = self.project_root / '.vibe_context.json'

async def maintain_context(self, session_data: Dict) -> None:

"""Maintain development context across AI interactions"""

context = {

'current_feature': session_data.get('feature_name'),

'recent_changes': session_data.get('file_changes', []),

'open_issues': session_data.get('issues', []),

'testing_strategy': session_data.get('test_approach'),

'architectural_decisions': session_data.get('decisions', [])

}

await self.save_context(context)

async def get_context_for_ai(self) -> str:

"""Format context for AI consumption"""

context = await self.load_context()

return f"""

Current Development Context:

Feature: {context.get('current_feature', 'Not specified')}

Recent changes: {', '.join(context.get('recent_changes', []))}

Open issues: {context.get('open_issues', [])}

Testing approach: {context.get('testing_strategy', 'Not defined')}

Architectural decisions:

{self.format_decisions(context.get('architectural_decisions', []))}

"""Planning and Testing Framework Integration

Test-First Development Pattern:

class TestFirstWorkflow:

def __init__(self, ai_client):

self.ai = ai_client

self.test_framework = 'pytest'

async def implement_feature(self, feature_description: str) -> FeatureImplementation:

# Step 1: Generate comprehensive test cases

test_cases = await self.ai.generate_test_cases(f"""

Create comprehensive test cases for this feature:

{feature_description}

Include:

- Unit tests for individual functions

- Integration tests for component interaction

- Edge cases and error conditions

- Performance considerations

Use pytest framework with appropriate fixtures.

""")

# Step 2: Implement tests and verify they fail

test_files = await self.create_test_files(test_cases)

test_results = await self.run_tests(test_files)

if not self.all_tests_failed(test_results):

raise ValueError("Tests should fail initially (Red phase)")

# Step 3: Implement feature to pass tests

implementation = await self.ai.implement_feature(f"""

Implement this feature to pass the following tests:

Feature: {feature_description}

Test cases:

{test_cases}

Current test results:

{test_results}

Focus on:

- Making all tests pass

- Clean, readable code

- Proper error handling

- Performance efficiency

""")

# Step 4: Verify implementation

final_test_results = await self.run_tests_with_implementation(implementation)

return FeatureImplementation(

tests=test_files,

implementation=implementation,

test_results=final_test_results,

coverage_report=await self.generate_coverage_report(implementation)

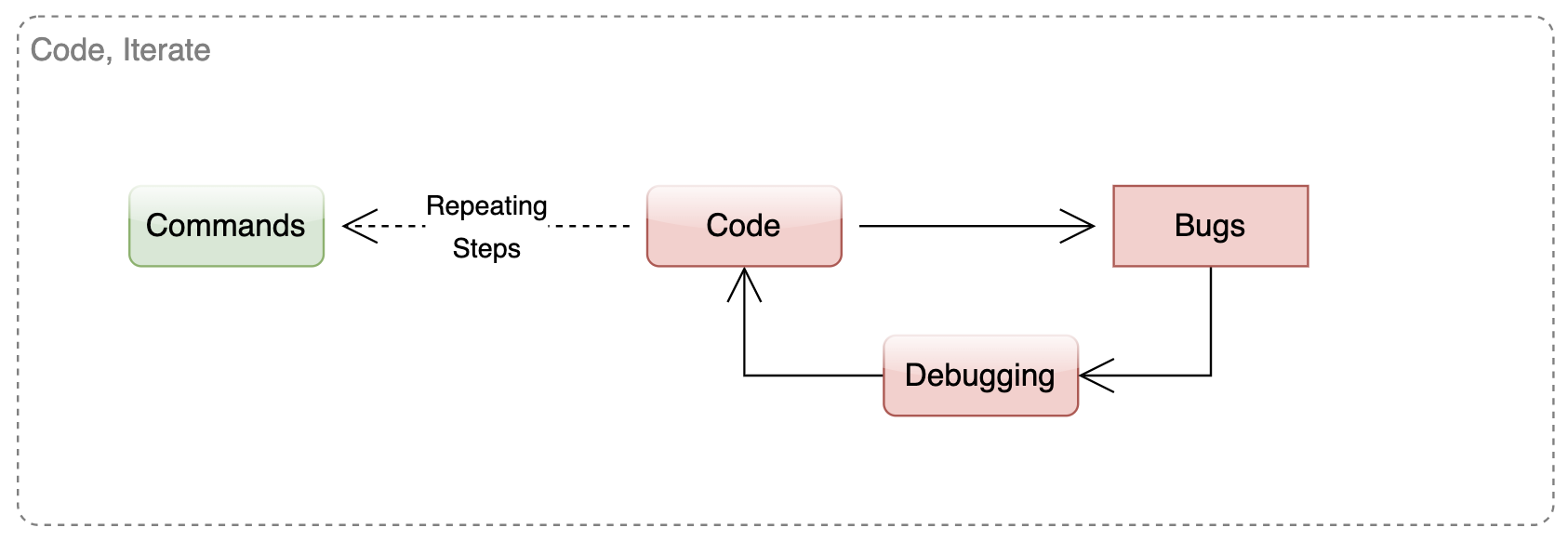

)Code Quality and Iteration

Continuous Improvement Workflow:

class CodeQualityWorkflow:

def __init__(self):

self.quality_checkers = [

StaticAnalyzer(),

SecurityScanner(),

PerformanceProfiler(),

DocumentationChecker()

]

async def iterate_for_quality(self, code: str, requirements: str) -> QualityImprovement:

iteration_count = 0

current_code = code

while iteration_count < 5: # Maximum 5 iterations

# Run quality checks

quality_report = await self.assess_code_quality(current_code)

if quality_report.overall_score >= 0.9:

break

# Generate improvement suggestions

improvements = await self.ai.suggest_improvements(f"""

Analyze this code and suggest specific improvements:

Code:

{current_code}

Requirements:

{requirements}

Quality Issues Found:

{quality_report.issues}

Focus on:

- Code readability and maintainability

- Performance optimization

- Security best practices

- Proper error handling

""")

# Apply improvements

current_code = await self.apply_improvements(current_code, improvements)

iteration_count += 1

return QualityImprovement(

final_code=current_code,

iterations=iteration_count,

quality_score=quality_report.overall_score,

improvements_applied=improvements

)Framework Integration Patterns

FastAPI + Reflex Architecture

Modern Web Application Pattern:

# Backend API with FastAPI

from fastapi import FastAPI, HTTPException, Depends

from fastapi.middleware.cors import CORSMiddleware

from sqlmodel import SQLModel, create_engine, Session

from typing import List, Optional

app = FastAPI(title="Vibe Coding Demo API")

# Database setup

DATABASE_URL = "postgresql://user:password@localhost/vibedb"

engine = create_engine(DATABASE_URL)

def get_session():

with Session(engine) as session:

yield session

# AI-generated endpoint with proper error handling

@app.post("/api/posts/", response_model=PostResponse)

async def create_post(

post: PostCreate,

session: Session = Depends(get_session)

) -> PostResponse:

"""

Create a new post with AI-assisted content validation.

This endpoint demonstrates vibe coding best practices:

- Type safety with Pydantic models

- Proper dependency injection

- Comprehensive error handling

- Automatic API documentation

"""

try:

# Validate content using AI

validation_result = await ai_content_validator.validate(post.content)

if not validation_result.is_valid:

raise HTTPException(

status_code=400,

detail=f"Content validation failed: {validation_result.reason}"

)

# Create post

db_post = Post(

title=post.title,

content=post.content,

author_id=post.author_id,

validated_at=datetime.utcnow()

)

session.add(db_post)

session.commit()

session.refresh(db_post)

return PostResponse.from_orm(db_post)

except ValidationError as e:

raise HTTPException(status_code=422, detail=str(e))

except Exception as e:

session.rollback()

raise HTTPException(status_code=500, detail="Internal server error")Frontend Integration with Reflex:

# Frontend with Reflex (Python-based React alternative)

import reflex as rx

from typing import List

class PostState(rx.State):

posts: List[Post] = []

current_post: str = ""

loading: bool = False

async def load_posts(self):

"""Load posts from API with error handling"""

self.loading = True

try:

async with httpx.AsyncClient() as client:

response = await client.get("/api/posts/")

response.raise_for_status()

self.posts = [Post(**post) for post in response.json()]

except httpx.HTTPError as e:

# Handle API errors gracefully

rx.toast.error(f"Failed to load posts: {str(e)}")

finally:

self.loading = False

async def create_post(self, form_data: dict):

"""Create new post with validation"""

if not form_data.get("title") or not form_data.get("content"):

rx.toast.error("Title and content are required")

return

try:

async with httpx.AsyncClient() as client:

response = await client.post("/api/posts/", json=form_data)

response.raise_for_status()

await self.load_posts() # Refresh posts

rx.toast.success("Post created successfully")

except httpx.HTTPError as e:

rx.toast.error(f"Failed to create post: {str(e)}")

def post_component(post: Post) -> rx.Component:

"""Reusable post component"""

return rx.box(

rx.heading(post.title, size="lg"),

rx.text(post.content),

rx.text(f"By {post.author}", color="gray"),

padding="1rem",

border="1px solid #e2e8f0",

border_radius="8px",

margin_bottom="1rem"

)

def app() -> rx.Component:

"""Main application component"""

return rx.box(

rx.heading("Vibe Coding Demo", size="xl", margin_bottom="2rem"),

# Loading indicator

rx.cond(

PostState.loading,

rx.spinner(),

rx.box()

),

# Posts list

rx.foreach(

PostState.posts,

post_component

),

# Load posts button

rx.button(

"Load Posts",

on_click=PostState.load_posts,

margin_top="1rem"

),

padding="2rem"

)Testing Framework Integration

Comprehensive Testing Strategy:

# test_post_api.py - Comprehensive test suite

import pytest

import asyncio

from httpx import AsyncClient

from fastapi.testclient import TestClient

from sqlmodel import Session, create_engine, SQLModel

from unittest.mock import Mock, patch

# Test configuration

@pytest.fixture(scope="session")

def test_engine():

"""Create test database engine"""

engine = create_engine("sqlite:///test.db")

SQLModel.metadata.create_all(engine)

return engine

@pytest.fixture

def test_session(test_engine):

"""Create test database session"""

with Session(test_engine) as session:

yield session

session.rollback()

@pytest.fixture

def test_client(test_session):

"""Create test client with dependency override"""

def get_test_session():

return test_session

app.dependency_overrides[get_session] = get_test_session

client = TestClient(app)

yield client

app.dependency_overrides.clear()

# Unit tests

class TestPostCreation:

"""Test post creation functionality"""

def test_create_valid_post(self, test_client):

"""Test creating a valid post"""

post_data = {

"title": "Test Post",

"content": "This is a test post content.",

"author_id": 1

}

with patch('ai_content_validator.validate') as mock_validator:

mock_validator.return_value.is_valid = True

response = test_client.post("/api/posts/", json=post_data)

assert response.status_code == 200

assert response.json()["title"] == "Test Post"

def test_create_invalid_post(self, test_client):

"""Test creating post with invalid content"""

post_data = {

"title": "Invalid Post",

"content": "This content fails validation.",

"author_id": 1

}

with patch('ai_content_validator.validate') as mock_validator:

mock_validator.return_value.is_valid = False

mock_validator.return_value.reason = "Content inappropriate"

response = test_client.post("/api/posts/", json=post_data)

assert response.status_code == 400

assert "Content validation failed" in response.json()["detail"]

# Integration tests

class TestPostWorkflow:

"""Test complete post workflow"""

@pytest.mark.asyncio

async def test_complete_post_lifecycle(self, test_client):

"""Test creating, reading, updating, and deleting a post"""

# Create post

create_response = test_client.post("/api/posts/", json={

"title": "Lifecycle Test",

"content": "Testing complete lifecycle.",

"author_id": 1

})

post_id = create_response.json()["id"]

# Read post

read_response = test_client.get(f"/api/posts/{post_id}")

assert read_response.status_code == 200

# Update post

update_response = test_client.put(f"/api/posts/{post_id}", json={

"title": "Updated Lifecycle Test",

"content": "Updated content."

})

assert update_response.status_code == 200

# Delete post

delete_response = test_client.delete(f"/api/posts/{post_id}")

assert delete_response.status_code == 200

# Performance tests

class TestPerformance:

"""Test API performance"""

def test_post_creation_performance(self, test_client):

"""Test post creation performance under load"""

import time

start_time = time.time()

# Create multiple posts concurrently

responses = []

for i in range(10):

response = test_client.post("/api/posts/", json={

"title": f"Performance Test {i}",

"content": f"Performance test content {i}.",

"author_id": 1

})

responses.append(response)

end_time = time.time()

# Verify all posts created successfully

for response in responses:

assert response.status_code == 200

# Verify performance threshold (under 1 second for 10 posts)

assert (end_time - start_time) < 1.0Production Deployment Patterns

CI/CD Integration

Automated Pipeline Configuration:

# .github/workflows/vibe-coding-ci.yml

name: Vibe Coding CI/CD

on:

push:

branches: [ main, develop ]

pull_request:

branches: [ main ]

jobs:

test:

runs-on: ubuntu-latest

services:

postgres:

image: postgres:13

env:

POSTGRES_PASSWORD: postgres

POSTGRES_DB: test_db

options: >-

--health-cmd pg_isready

--health-interval 10s

--health-timeout 5s

--health-retries 5

steps:

- uses: actions/checkout@v3

- name: Set up Python

uses: actions/setup-python@v4

with:

python-version: '3.11'

- name: Install dependencies

run: |

pip install -r requirements.txt

pip install -r requirements-dev.txt

- name: Run tests with coverage

run: |

pytest --cov=. --cov-report=xml --cov-report=html

- name: Upload coverage to Codecov

uses: codecov/codecov-action@v3

- name: Run security scan

run: |

safety check

bandit -r .

- name: Run code quality checks

run: |

black --check .

isort --check-only .

flake8

mypy .

deploy:

needs: test

runs-on: ubuntu-latest

if: github.ref == 'refs/heads/main'

steps:

- uses: actions/checkout@v3

- name: Deploy to production

run: |

# Production deployment script

./deploy.shMonitoring and Observability

Production Monitoring Setup:

# monitoring.py - Production monitoring configuration

import logging

import structlog

from prometheus_client import Counter, Histogram, generate_latest

from fastapi import Request

import time

# Metrics

REQUEST_COUNT = Counter('http_requests_total', 'Total HTTP requests', ['method', 'endpoint', 'status'])

REQUEST_DURATION = Histogram('http_request_duration_seconds', 'HTTP request duration')

# Structured logging

structlog.configure(

processors=[

structlog.stdlib.filter_by_level,

structlog.stdlib.add_logger_name,

structlog.stdlib.add_log_level,

structlog.stdlib.PositionalArgumentsFormatter(),

structlog.processors.TimeStamper(fmt="iso"),

structlog.processors.StackInfoRenderer(),

structlog.processors.format_exc_info,

structlog.processors.UnicodeDecoder(),

structlog.processors.JSONRenderer()

],

context_class=dict,

logger_factory=structlog.stdlib.LoggerFactory(),

wrapper_class=structlog.stdlib.BoundLogger,

cache_logger_on_first_use=True,

)

logger = structlog.get_logger()

@app.middleware("http")

async def monitoring_middleware(request: Request, call_next):

"""Add monitoring to all requests"""

start_time = time.time()

# Log request start

logger.info(

"Request started",

method=request.method,

url=str(request.url),

client_ip=request.client.host

)

try:

response = await call_next(request)

# Record metrics

REQUEST_COUNT.labels(

method=request.method,

endpoint=request.url.path,

status=response.status_code

).inc()

duration = time.time() - start_time

REQUEST_DURATION.observe(duration)

# Log successful response

logger.info(

"Request completed",

method=request.method,

url=str(request.url),

status_code=response.status_code,

duration=duration

)

return response

except Exception as e:

# Log error

logger.error(

"Request failed",

method=request.method,

url=str(request.url),

error=str(e),

duration=time.time() - start_time

)

raise

@app.get("/metrics")

async def metrics():

"""Prometheus metrics endpoint"""

return Response(generate_latest(), media_type="text/plain")Integration with Trenddit Ecosystem

Unified Development Experience

These vibe coding fundamentals integrate seamlessly with the Trenddit ecosystem:

Trenddit Memo Integration:

- Development Documentation: Capture and organize coding patterns and solutions

- Knowledge Sharing: Share successful vibe coding patterns across team

- Learning Acceleration: Access to curated development best practices

- Context Management: Maintain development context across browser sessions

Ecosystem Benefits:

- Consistent Methodology: Unified approach across all Trenddit development

- Shared Learning: Cross-product insights improve development practices

- Quality Standards: Consistent quality gates and testing standards

- Automation Platform: Complete development automation solution

Advanced Patterns and Future Development

AI-Enhanced Development Tools

Intelligent Code Review:

class AICodeReviewer:

def __init__(self):

self.review_ai = AnthropicClient()

async def review_pull_request(self, pr_diff: str) -> CodeReviewResult:

review = await self.review_ai.generate_text(f"""

Perform a comprehensive code review of this pull request:

{pr_diff}

Focus on:

1. Code quality and maintainability

2. Security vulnerabilities

3. Performance implications

4. Testing coverage

5. Documentation completeness

6. Best practice adherence

Provide specific, actionable feedback with line references.

""")

return CodeReviewResult(

overall_score=self.extract_score(review),

comments=self.parse_comments(review),

approval_recommendation=self.extract_recommendation(review)

)Next Steps and Related Learning

Continue exploring vibe coding mastery:

- AI Automation Best Practices for advanced workflows

- Enterprise AI Development Workflows for scaling

- Lean AI Stack Selection for optimal technology choices

By mastering these vibe coding fundamentals, development teams can achieve the perfect balance of AI-assisted productivity and production-quality results. The key is establishing solid foundations that enable rapid iteration while maintaining code quality, testing rigor, and operational reliability.